Model-Based Testing in React with State Machines

Testing applications is crucially important to ensuring that the code is error-free and the logic requirements are met. However, writing tests manually is tedious and prone to human bias and error. Furthermore, maintenance can be a nightmare, especially when features are added or business logic is changed. We’ll learn how model-based testing can eliminate the need to manually write integration and end-to-end tests, by automatically generating full tests that stay up to date with an abstract model of the app.

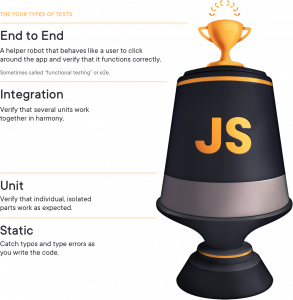

From unit tests to integration tests, end-to-end tests, and more, there are many different testing methods that are important in the development of non-trivial software applications. They all share a common goal, but at different levels: ensure that when anyone uses the application, it behaves exactly as expected without any unintended states, bugs, or worse, crashes.

Kent C. Dodds describes the practical importance of writing these tests in his article, “Write tests. Not too many. Mostly integration.” Some tests, like static and unit tests, are easy to author, but don’t completely ensure that every unit will work together. Other tests, like integration and end-to-end (E2E) tests, take more time to author, but give you much more confidence that the application will work as the user expects, since they replicate scenarios similar to how a user would use the application in real life.

So why are there never many integration or E2E tests in applications nowadays, yet hundreds (if not thousands) of unit tests? The reasons range from a lack of resources or time to a lack of understanding of the importance of writing these tests. Furthermore, even if numerous integration/E2E tests are written, if one part of the application changes, most of those long and complicated tests need to be rewritten, and new tests need to be written. Under deadlines, this quickly becomes infeasible.

From Automated to Autogenerated

The status quo of application testing is:

- Manual testing, where no automated tests exist, and app features and user flows are tested manually

- Writing automated tests, which are scripted tests that can be executed automatically by a program, instead of being manually tested by a human

- Test automation, which is the strategy for executing these automated tests in the development cycle.

Needless to say, test automation saves a lot of time in executing the tests, but the tests still need to be manually written. It would sure be nice to tell some sort of tool: “Here is a description of how the application is supposed to behave. Now generate all the tests, even the edge cases.”

Thankfully, this idea already exists (and has been researched for decades), and it’s called model-based testing. Here’s how it works:

- An abstract “model” that describes the behavior of your application (in the form of a directed graph) is created

- Test paths are generated from the directed graph

- Each “step” in the test path is mapped to a test that can be executed on the application.

Each integration and E2E test is essentially a series of steps that alternate between:

- Verify that the application looks correct (a state)

- Simulate some action (to produce an event)

- Verify that the application looks right after the action (another state)

If you’re familiar with the given-when-then style of behavioral testing, this will look familiar:

- Given some initial state (precondition)

- When some action occurs (behavior)

- Then some new state is expected (postcondition).

A model can describe all the possible states and events, and automatically generate the “paths” needed to get from one state to another, just like Google Maps can generate the possible routes between one location and another. Just like a map route, each path is a collection of steps needed to get from point A to point B.
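
To make the map analogy concrete, here is a minimal, library-free sketch of route finding over a model. The states and events (home, settings, OPEN_PROFILE, and so on) are hypothetical and not part of the article's app; the model is a plain object, and a breadth-first search returns the shortest list of steps between two states.

```javascript
// Hypothetical model of a small app: each state maps event names
// to the state they lead to (the edges of a directed graph).
const graph = {
  home: { OPEN_SETTINGS: 'settings', OPEN_PROFILE: 'profile' },
  settings: { SAVE: 'home', OPEN_PROFILE: 'profile' },
  profile: { LOG_OUT: 'loggedOut' },
  loggedOut: {}
};

// Breadth-first search: returns the shortest list of steps
// ({ state, event }) from `start` to `target`, or null if unreachable.
function shortestPath(graph, start, target) {
  const queue = [{ state: start, steps: [] }];
  const visited = new Set([start]);

  while (queue.length > 0) {
    const { state, steps } = queue.shift();
    if (state === target) return steps;

    for (const [event, next] of Object.entries(graph[state])) {
      if (visited.has(next)) continue;
      visited.add(next);
      queue.push({ state: next, steps: [...steps, { state, event }] });
    }
  }
  return null;
}

console.log(shortestPath(graph, 'home', 'loggedOut'));
```

Each returned step pairs a state to verify with an event to execute, which is the same given/when shape described above.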

Integration Testing Without a Model

To better explain this, consider a simple “feedback” application. We can describe it like so:

- A panel appears asking the user, “How was your experience?”

- The user can click “Good” or “Bad”

- When the user clicks “Good,” a screen saying “Thanks for your feedback” appears.

- When the user clicks “Bad,” a form appears, asking for further information.

- The user can optionally fill out the form and submit the feedback.

- When the form is submitted, the thanks screen appears.

- The user can click “Close” or press the Escape key to close the feedback app on any screen.

Manually Testing the App

The @testing-library/react library makes it straightforward to render React apps in a testing environment with its render() function. This returns useful methods, such as:

- getByText, which identifies DOM elements by the text contained inside of them

- baseElement, which represents the root document.documentElement and will be used to trigger a keyDown event

- queryByText, which will not throw an error if a DOM element containing the specified text is missing (so we can assert that nothing is rendered)

import Feedback from './App';

import { render, fireEvent, cleanup } from '@testing-library/react';

// ...

// Render the feedback app

const {

getByText,

getByTitle,

getByPlaceholderText,

baseElement,

queryByText

} = render(<Feedback />);

// ...

More information can be found in the @testing-library/react documentation. Let’s write a couple of integration tests for this with Jest (or Mocha) and @testing-library/react:

import React from 'react';

import Feedback from './App';

import { render, fireEvent, cleanup } from '@testing-library/react';

import { assert } from 'chai';

describe('feedback app', () => {

afterEach(cleanup);

it('should show the thanks screen when "Good" is clicked', () => {

const { getByText } = render(<Feedback />);

// The question screen should be visible at first

assert.ok(getByText('How was your experience?'));

// Click the "Good" button

fireEvent.click(getByText('Good'));

// Now the thanks screen should be visible

assert.ok(getByText('Thanks for your feedback.'));

});

it('should show the form screen when "Bad" is clicked', () => {

const { getByText } = render(<Feedback />);

// The question screen should be visible at first

assert.ok(getByText('How was your experience?'));

// Click the "Bad" button

fireEvent.click(getByText('Bad'));

// Now the form screen should be visible

assert.ok(getByText('Care to tell us why?'));

});

});

Not too bad, but you’ll notice that there’s some repetition going on. At first, this isn’t a big deal (tests shouldn’t necessarily be DRY), but these tests can become less maintainable when:

- Application behavior changes, such as adding new steps or deleting steps

- User interface elements change, in a way that might not even be a simple component change (such as trading a button for a keyboard shortcut or gesture)

- Edge cases start occurring and need to be accounted for.

Furthermore, E2E tests will test the exact same behavior (albeit in a more realistic testing environment, such as a live browser with Puppeteer or Selenium), yet they cannot reuse the same tests since the code for executing the tests is incompatible with those environments.

The State Machine as an Abstract Model

Remember the informal description of our feedback app above? We can translate that into a model that represents the different states, events, and transitions between states the app can be in; in other words, a finite state machine. A finite state machine is a representation of:

- The finite states in the app (e.g., question, form, thanks, closed)

- An initial state (e.g., question)

- The events that can occur in the app (e.g., CLICK_GOOD, CLICK_BAD for clicking the good/bad buttons, CLOSE for clicking the close button, and SUBMIT for submitting the form)

- Transitions, or how one state transitions to another state due to an event (e.g., when in the question state and the CLICK_GOOD action is performed, the user is now in the thanks state)

- Final states (e.g., closed), if applicable.

The feedback app’s behavior can be represented with these states, events, and transitions in a finite state machine, and looks like this:

A visual representation can be generated from a JSON-like description of the state machine, using XState:

import { Machine } from 'xstate';

const feedbackMachine = Machine({

id: 'feedback',

initial: 'question',

states: {

question: {

on: {

CLICK_GOOD: 'thanks',

CLICK_BAD: 'form',

CLOSE: 'closed'

}

},

form: {

on: {

SUBMIT: 'thanks',

CLOSE: 'closed'

}

},

thanks: {

on: {

CLOSE: 'closed'

}

},

closed: {

type: 'final'

}

}

});

If you’re interested in diving deeper into XState, you can read the XState docs, or read a great article about using XState with React by Jon Bellah. Note that this finite state machine is used only for testing, and not in our actual application — this is an important principle of model-based testing, because it represents how the user expects the app to behave, and not its actual implementation details. The app doesn’t necessarily need to be created with finite state machines in mind (although it’s a very helpful practice).
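
To see what the machine definition above encodes, here is a library-free sketch of the same transition table with a small lookup function. This is only an illustration of the idea, not how XState is implemented; the transition helper is a hypothetical name.

```javascript
// The feedback machine's transitions as a plain lookup table.
const transitions = {
  question: { CLICK_GOOD: 'thanks', CLICK_BAD: 'form', CLOSE: 'closed' },
  form: { SUBMIT: 'thanks', CLOSE: 'closed' },
  thanks: { CLOSE: 'closed' },
  closed: {} // final state: no outgoing transitions
};

// Returns the next state, or the current state when the event
// is not handled in that state.
function transition(state, event) {
  const next = transitions[state][event];
  return next === undefined ? state : next;
}

console.log(transition('question', 'CLICK_BAD')); // form
console.log(transition('thanks', 'SUBMIT')); // thanks (event ignored)
```

Because the table describes only what the user can observe, it stays valid no matter how the React component manages its state internally.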

Creating a Test Model

The app’s behavior is now described as a directed graph, where the nodes are states and the edges (or arrows) are events that denote the transitions between states. We can use that state machine (the abstract representation of the behavior) to create a test model. The @xstate/test library contains a createModel function to do that:

import { Machine } from 'xstate';

import { createModel } from '@xstate/test';

const feedbackMachine = Machine({/* ... */});

const feedbackModel = createModel(feedbackMachine);

This test model is an abstract model that represents the desired behavior of the system under test (SUT); in this example, that is our app. With this test model, we can create test plans that check whether the SUT can reach each state in the model. A test plan describes the test paths that can be taken to reach a target state.

Verifying States

Right now, this model is a bit useless. It can generate test paths (as we’ll see in the next section) but to serve its purpose as a model for testing, we need to add a test for each of the states. The @xstate/test package will read these test functions from meta.test:

const feedbackMachine = Machine({

id: 'feedback',

initial: 'question',

states: {

question: {

on: {

CLICK_GOOD: 'thanks',

CLICK_BAD: 'form',

CLOSE: 'closed'

},

meta: {

// getByTestId, etc. will be passed into path.test(...) later.

test: ({ getByTestId }) => {

assert.ok(getByTestId('question-screen'));

}

}

},

// ... etc.

}

});

Notice that these are the same assertions from the manually written tests we’ve created previously with @testing-library/react. The purpose of these tests is to verify the precondition that the SUT is in the given state before executing an event.

Executing Events

To make our test model complete, we need to make each of the events, such as CLICK_GOOD or CLOSE, “real” and executable. That is, we have to map these events to actual actions that will be executed in the SUT. The execution functions for each of these events are specified in createModel(…).withEvents(…):

import { Machine } from 'xstate';

import { createModel } from '@xstate/test';

const feedbackMachine = Machine({/* ... */});

const feedbackModel = createModel(feedbackMachine)

.withEvents({

// getByTestId, etc. will be passed into path.test(...) later.

CLICK_GOOD: ({ getByText }) => {

fireEvent.click(getByText('Good'));

},

CLICK_BAD: ({ getByText }) => {

fireEvent.click(getByText('Bad'));

},

CLOSE: ({ getByTestId }) => {

fireEvent.click(getByTestId('close-button'));

},

SUBMIT: {

exec: async ({ getByTestId }, event) => {

fireEvent.change(getByTestId('response-input'), {

target: { value: event.value }

});

fireEvent.click(getByTestId('submit-button'));

},

cases: [{ value: 'something' }, { value: '' }]

}

});

Notice that you can either specify each event as an execution function, or (in the case of SUBMIT) as an object with the execution function specified in exec and sample event cases specified in cases.
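
One way to picture what the sample cases are for: each case can be turned into a concrete event, so that a single SUBMIT entry yields one event per sample value. The sketch below is a simplified illustration under that assumption, not the internals of @xstate/test; expandEvent and eventConfigs are hypothetical names.

```javascript
// Hypothetical event configuration in the two shapes described above:
// a bare function, or an object with `exec` and sample `cases`.
const eventConfigs = {
  CLICK_GOOD: () => {},
  SUBMIT: {
    exec: () => {},
    cases: [{ value: 'something' }, { value: '' }]
  }
};

// Expand one configured event into concrete event objects:
// one per sample case, or a single bare event when there are no cases.
function expandEvent(type, config) {
  const cases = typeof config === 'function' ? [] : config.cases || [];
  if (cases.length === 0) return [{ type }];
  return cases.map(sample => ({ type, ...sample }));
}

const concreteEvents = Object.entries(eventConfigs).flatMap(
  ([type, config]) => expandEvent(type, config)
);

console.log(concreteEvents);
```

Here SUBMIT expands into two events, one with a filled-in value and one with an empty value, which is how an optional form field can be exercised both ways.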

From Model To Test Paths

Take a look at the visualization again and follow the arrows, starting from the initial question state. You’ll notice that there are many possible paths you can take to reach any other state. For example:

- From the question state, the CLICK_BAD event transitions to…

- the form state, and then the SUBMIT event transitions to…

- the thanks state, and then the CLOSE event transitions to…

- the closed state.

Since the app’s behavior is a directed graph, we can generate all the possible simple paths or shortest paths from the initial state. A simple path is a path where no node is repeated. That is, we’re assuming the user isn’t going to visit a state more than once (although that might be a valid thing to test for in the future). A shortest path is the shortest of these simple paths.
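
As a rough illustration of what “all simple paths” means for this app, the following library-free sketch enumerates them with a depth-first search over the feedback machine's transitions. The simplePaths helper and the plain-object graph are assumptions made for the example; @xstate/test does this work for you.

```javascript
// The feedback machine's transitions as a plain directed graph.
const feedbackGraph = {
  question: { CLICK_GOOD: 'thanks', CLICK_BAD: 'form', CLOSE: 'closed' },
  form: { SUBMIT: 'thanks', CLOSE: 'closed' },
  thanks: { CLOSE: 'closed' },
  closed: {}
};

// Depth-first search collecting every simple path (no state visited
// twice) from `start`, grouped by the state each path ends in.
function simplePaths(graph, start) {
  const byTarget = {};

  function visit(state, events, seen) {
    (byTarget[state] = byTarget[state] || []).push(events);
    for (const [event, next] of Object.entries(graph[state])) {
      if (seen.has(next)) continue;
      visit(next, [...events, event], new Set(seen).add(next));
    }
  }

  visit(start, [], new Set([start]));
  return byTarget;
}

const paths = simplePaths(feedbackGraph, 'question');
for (const [target, list] of Object.entries(paths)) {
  console.log(target, list.map(events => events.join(' → ') || '(initial)'));
}
```

For this graph the search finds eight simple paths: one to question, two to thanks, four to closed, and one to form. The shortest path to each state is simply the one with the fewest events in its group.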

Rather than explaining algorithms for traversing graphs to find shortest paths (Vaidehi Joshi has great articles on graph traversal if you’re interested in that), the test model we created with @xstate/test has a .getSimplePathPlans(…) method that generates test plans.

Each test plan represents a target state and simple paths from the initial state to that target state. Each test path represents a series of steps to get to that target state, with each step including a state (precondition) and an event (action) that is executed after verifying that the app is in the state.

For example, a single test plan can represent reaching the thanks state, and that test plan can have one or more paths for reaching that state, such as question -- CLICK_BAD → form -- SUBMIT → thanks, or question -- CLICK_GOOD → thanks:

// Get test plans to all states via simple paths

const testPlans = testModel.getSimplePathPlans();

We can then loop over these plans to describe each state. The plan.description is provided by @xstate/test, such as reaches state: "question":

testPlans.forEach(plan => {

describe(plan.description, () => {

// ...

});

});

And each path in plan.paths can be tested, also with a provided path.description like via CLICK_GOOD → CLOSE:

testPlans.forEach(plan => {

describe(plan.description, () => {

// Do any cleanup work after testing each path

afterEach(cleanup);

plan.paths.forEach(path => {

it(path.description, async () => {

// Test setup

const rendered = render(<Feedback />);

// Test execution

await path.test(rendered);

});

});

});

});

Testing a path with path.test(…) involves:

- Verifying that the app is in some state of a path’s step

- Executing the action associated with the event of a path’s step

- Repeating the two steps above until there are no more steps

- Finally, verifying that the app is in the target plan.state.
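
The verify/execute loop described above can be sketched without any libraries. Everything here is hypothetical: the fake app only tracks which screen is visible, and runPath is a synchronous stand-in for path.test(…), which is awaited in the real test because it is asynchronous.

```javascript
// Hypothetical stand-in for the rendered app: it only tracks the visible screen.
function createFakeApp() {
  return { screen: 'question' };
}

// Event executors: each one performs the action for an event.
const executors = {
  CLICK_GOOD: app => { app.screen = 'thanks'; },
  CLICK_BAD: app => { app.screen = 'form'; },
  SUBMIT: app => { app.screen = 'thanks'; },
  CLOSE: app => { app.screen = 'closed'; }
};

// State verification: throws if the app is not showing the expected screen.
function verifyState(app, state) {
  if (app.screen !== state) {
    throw new Error(`Expected "${state}" but saw "${app.screen}"`);
  }
}

// Runs one path: verify the state, execute the event, repeat,
// then verify the target state at the end.
function runPath(path, app) {
  const log = [];
  for (const step of path.steps) {
    verifyState(app, step.state);
    log.push(`verified ${step.state}`);
    executors[step.event](app);
    log.push(`executed ${step.event}`);
  }
  verifyState(app, path.target);
  log.push(`verified ${path.target}`);
  return log;
}

const examplePath = {
  target: 'thanks',
  steps: [
    { state: 'question', event: 'CLICK_BAD' },
    { state: 'form', event: 'SUBMIT' }
  ]
};

console.log(runPath(examplePath, createFakeApp()).join('\n'));
```

If the app ends up on the wrong screen at any point, verifyState throws and the path fails at exactly the step where behavior diverged from the model.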

Finally, we want to ensure that each of the states in our test model was tested. When the tests are run, the test model keeps track of the tested states, and provides a testModel.testCoverage() function which will fail if not all states were covered:

it('coverage', () => {

testModel.testCoverage();

});

Overall, our test suite looks like this:

import React from 'react';

import Feedback from './App';

import { Machine } from 'xstate';

import { render, fireEvent, cleanup } from '@testing-library/react';

import { assert } from 'chai';

import { createModel } from '@xstate/test';

describe('feedback app', () => {

const feedbackMachine = Machine({/* ... */});

const testModel = createModel(feedbackMachine)

.withEvents({/* ... */});

const testPlans = testModel.getSimplePathPlans();

testPlans.forEach(plan => {

describe(plan.description, () => {

afterEach(cleanup);

plan.paths.forEach(path => {

it(path.description, () => {

const rendered = render(<Feedback />);

return path.test(rendered);

});

});

});

});

it('coverage', () => {

testModel.testCoverage();

});

});

This might seem like a bit of setup, but manually scripted integration tests need to have all of this setup anyway, in a much less abstracted way. One of the major advantages of model-based testing is that you only need to set this up once, whether you have 10 tests or 1,000 tests generated.

Running the Tests

In create-react-app, the tests are run using Jest via the command npm test (or yarn test). When the tests are run, assuming they all pass, the output will look something like this:

PASS src/App.test.js

feedback app

✓ coverage

reaches state: "question"

✓ via (44ms)

reaches state: "thanks"

✓ via CLICK_GOOD (17ms)

✓ via CLICK_BAD → SUBMIT ({"value":"something"}) (13ms)

reaches state: "closed"

✓ via CLICK_GOOD → CLOSE (6ms)

✓ via CLICK_BAD → SUBMIT ({"value":"something"}) → CLOSE (11ms)

✓ via CLICK_BAD → CLOSE (10ms)

✓ via CLOSE (4ms)

reaches state: "form"

✓ via CLICK_BAD (5ms)

Test Suites: 1 passed, 1 total

Tests: 9 passed, 9 total

Snapshots: 0 total

Time: 2.834s

That’s eight path tests, plus the coverage check, automatically generated from our finite state machine model of the app! Every single one of those path tests asserts that the app is in the correct state and that the proper actions are executed (and validated) to transition to the next state at each step, and finally asserts that the app is in the correct target state.

These tests can quickly grow as your app gets more complex; for instance, if you add a back button to each screen or add some validation logic to the form page (please don’t; be thankful the user is even going through the feedback form in the first place) or add a loading state when submitting the form, the number of possible paths will increase.

Advantages of Model-Based Testing

Model-based testing greatly simplifies the creation of integration and E2E tests by autogenerating them based on a model (like a finite state machine), as demonstrated above. Since manually writing full tests is eliminated from the test creation process, adding or removing features is no longer a test maintenance burden. Only the abstract model needs to be updated, without touching any other part of the testing code.

For example, if you want to add the feature that the form shows up whether the user clicks the “Good” or “Bad” button, it’s a one-line change in the finite state machine:

// ...

question: {

on: {

// CLICK_GOOD: 'thanks',

CLICK_GOOD: 'form',

CLICK_BAD: 'form',

CLOSE: 'closed',

ESC: 'closed'

},

meta: {/* ... */}

},

// ...

All tests that are affected by the new behavior will be updated. Test maintenance is reduced to maintaining the model, which saves time and prevents errors that can be made in manually updating tests. This has been shown to improve efficiency in both developing and testing production applications, especially as used at Microsoft on recent customer projects: when new features were added or changes made, the autogenerated tests gave immediate feedback on which parts of the app logic were affected, without needing to manually regression test various flows.

Additionally, since the model is abstract and not tied to implementation details, the exact same model, as well as most of the testing code, can be used to author E2E tests. The only things that would change are the tests for verifying the state and the execution of the actions. For example, if you were using Puppeteer, you can update the state machine:

// ...

question: {

on: {

CLICK_GOOD: 'thanks',

CLICK_BAD: 'form',

CLOSE: 'closed'

},

meta: {

test: async (page) => {

await page.waitForSelector('[data-testid="question-screen"]');

}

}

},

// ...

And update the event executors:

const testModel = createModel(/* ... */)

.withEvents({

CLICK_GOOD: async (page) => {

const goodButton = await page.$('[data-testid="good-button"]');

await goodButton.click();

},

// ...

});

And then these tests can be run against a live Chromium browser instance:

The tests are autogenerated the same, and this cannot be overstated. Although it just seems like a fancy way to create DRY test code, it goes exponentially further than that: autogenerated tests can exhaustively represent paths that explore all the possible actions a user can do at all possible states in the app, which can readily expose edge cases that you might not have even imagined.

The code for both the integration tests with @testing-library/react and the E2E tests with Puppeteer can be found in the XState test demo repository.

Challenges to Model-Based Testing

Since model-based testing shifts the work from manually writing tests to manually writing models, there is a learning curve. Creating the model necessitates the understanding of finite state machines, and possibly even statecharts. Learning these is greatly beneficial for more reasons than just testing, since finite state machines are one of the core principles of computer science, and statecharts make state machines more flexible and scalable for complex app and software development. The World of Statecharts by Erik Mogensen is a great resource for understanding and learning how statecharts work.

Another issue is that the algorithm for traversing the finite state machine can generate exponentially many test paths. This can be considered a good problem to have, since every one of those paths represents a valid way that a user can potentially interact with an app. However, this can also be computationally expensive and result in semi-redundant tests that your team would rather skip to save testing time. There are also ways to limit these test paths, e.g., using shortest paths instead of simple paths, or by refactoring the model. Excessive tests can be a sign of an overly complex model (or even an overly complex app).
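
To get a feel for how the number of paths grows, this library-free sketch counts simple paths for the feedback model and for a variant with a hypothetical loading state (the loading, SUCCESS, and FAILURE names are made up for the example). It compares that count with the number of reachable states, assuming a shortest-path strategy produces one path per reachable state.

```javascript
const feedbackGraph = {
  question: { CLICK_GOOD: 'thanks', CLICK_BAD: 'form', CLOSE: 'closed' },
  form: { SUBMIT: 'thanks', CLOSE: 'closed' },
  thanks: { CLOSE: 'closed' },
  closed: {}
};

// Hypothetical variant: submitting goes through a loading state first.
const withLoading = {
  ...feedbackGraph,
  form: { SUBMIT: 'loading', CLOSE: 'closed' },
  loading: { SUCCESS: 'thanks', FAILURE: 'form', CLOSE: 'closed' }
};

// Counts every simple path (no repeated state) starting at `state`.
function countSimplePaths(graph, state, seen = new Set([state])) {
  let count = 1; // the path that ends at `state` itself
  for (const next of Object.values(graph[state])) {
    if (!seen.has(next)) {
      count += countSimplePaths(graph, next, new Set(seen).add(next));
    }
  }
  return count;
}

// Counts reachable states, i.e. one shortest path per state.
function countReachableStates(graph, start) {
  const seen = new Set([start]);
  const stack = [start];
  while (stack.length > 0) {
    for (const next of Object.values(graph[stack.pop()])) {
      if (!seen.has(next)) {
        seen.add(next);
        stack.push(next);
      }
    }
  }
  return seen.size;
}

for (const [name, graph] of Object.entries({ feedbackGraph, withLoading })) {
  console.log(
    name,
    'simple paths:', countSimplePaths(graph, 'question'),
    'shortest paths:', countReachableStates(graph, 'question')
  );
}
```

One extra state adds a single shortest path, while the simple-path count grows faster because every new route through the graph is counted separately.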

Write Fewer Tests!

Modeling app behavior is not the easiest thing to do, but there are many benefits of representing your app as a declarative and abstract model, such as a finite state machine or a statechart. Even though the concept of model-based testing is over two decades old, it is still an evolving field. But with the above techniques, you can get started today and take advantage of generating integration and E2E tests instead of manually writing every single one of them.

More resources:

I gave a talk at React Rally 2019 demonstrating model-based testing in React apps:

- Slides: Write Fewer Tests! From Automation to Autogeneration

- Library: @xstate/test

- Community: Statecharts (spectrum.chat)

- Article: The Challenges and Benefits of Model-Based Testing (SauceLabs)

Happy testing!

The post Model-Based Testing in React with State Machines appeared first on CSS-Tricks.