Today, We’re talking about Next.js. What is it, and where might it fit into our web development workflow? I spoke to co-creator Guillermo Rauch to find out.

Show Notes

Weekly Update

Transcript

Drew McLellan: He’s the founder and CEO of Vercel, a cloud platform for static sites that fits around a Jamstack workflow. He’s also the co-creator of Next.js. He previously founded LearnBoost and CloudUp, and is well-known as the creator of several popular node open source libraries like Socket.io, Mongoose, and SlackIn. Prior to that, he was a core developer on MooTools, so we know he knows his way around JavaScript like the back of his hand. Did you know he once received a royal commission from the King of Spain to create an ice sculpture out of iceberg lettuce? My smashing friends, please welcome Guillermo Rauch. Hi Guillermo, how are you?

Guillermo Rauch: I’m smashing freaking good, thanks for having me.

Drew: I wanted to talk to you today about the whole world of Next.js, as it’s something that obviously you’re personally very knowledgeable about, having been involved as a co-creator right from the start. Next.js is one of those project names that has been on my radar while working in the Jamstack space, but it isn’t something that I’ve actually personally looked at or worked with too closely before. For people who are like me, who perhaps aren’t aware of what Next.js is, perhaps you could give us a bit of background into what it is and what problems it tries to solve.

Guillermo: Next.js is a very interesting member of the Jamstack universe, because Next.js actually started being a fully SSR-focused framework. It started getting a lot of adoption outside the Jamstack space where people were building very large things specifically when they wanted to have user generated content or dynamic content or social networks or e-commerce, and they knew that they wanted SSR because their data set was very large or very dynamic. It fell under the radar I think for a lot of people in the Jamstack world, but later on Next.js gained the capabilities for static optimization.

Guillermo: On one hand, for example, if you wouldn’t do data fetching at the top level of your page with Next.js, your React page would be … Also by the way, for those who are not fully in the know, Next.js is simply React framework for production, but has this capability of doing some rendering. Then when you get in static optimization capabilities, if you wouldn’t define data fetching at the top level of your page, it automatically exported as HTML instead of trying to do anything with server rendering.

Guillermo: Then later on, it also gained the capability for static site generation, meaning that you can define a special data hook, but that data hook gets data at build time. Next.js became a hybrid, very powerful dynamic and static framework, and now it’s been growing a lot in the Jamstack space as well.

Drew: People might say that React is already a framework, you certainly hear it described that way. What does it actually mean to be a framework for React?

Guillermo: That’s a great observation, because I always point out to people that React at Facebook and React outside of Facebook are completely different beasts. React at Facebook actually is used together with server rendering, but even their server rendering, for example, doesn’t use Node.js, it uses a highly specialized virtual machine called Hermes which communicates to their sort of production hack and PHP stack and answers all this advanced and exotic Facebook needs.

Guillermo: When they open source React, it’s almost like open sourcing a component. I always call it like open sourcing the engine, but not giving you the car. What happened is people really wanted to go and drive with it, they wanted to get to places with React. In the community, people started creating cars, and they would embed React as the engine, which was what the driver, the developer was after in the first place, make React the fundamental part of the car. Things like Next.js and Gatsby and React Static and many other frameworks started appearing that were solving the need for like, “I actually want to create fully loaded pages and applications.”

Guillermo: Whereas React was kind of more like the component and the engine for specific widgets within the page, this was certainly the case for Facebook. They will broadly and publicly admit that they invented it for things like the notification batch, the chat widget, the newsfeed component, and those components were React routes that were embedded into the contents of the production existing app with lots and lots of lines of code and even other JS libraries and frameworks.

Guillermo: What it means to create a framework for React, it means you make React the fundamental part of the story, hopefully and this is something we’ll try to do with Next.js, the learning curve is primarily about React with some added surface for Next.js, particularly around data fetching and routing. We also do a lot of production optimizations, so when you get React, when you get Create React app, which is sort of like, I like to call it a bootstrapped car that Facebook gives you, maybe the needs for production are not really met. Or if you try to do it yourself by configuring Webpack and configuring Babel and configuring server rendering and static generation, it’s also hard to put together a car from scratch. Next.js will give you this zero config and also production optimized set of defaults around building entire big things with React.

Drew: So it’s like it almost puts a sort of ecosystem around your React app with a collection of pre-configured tools to enable you to-

Guillermo: Correct.

Drew: Hit the ground running and do static site generation or server rendering or routing.

Guillermo: Correct, and you used a word there that is very, very key to all this, which is pre-configured. We’re fortunate enough to draw the attention of Google Chrome as a contributor to Next.js. One of the leaders of this project, her thing is that when they were working on frameworks internally at Google, they faced a lot of the same problems that the community and open source are facing today. There were many different competing initiatives at Google on how to scale and make really performant web apps out of the box.

Guillermo: You would join as a Googler and you would be given a framework with which you would create really big production ready, very high performance applications. Shubie was part of a lot of those initiatives, and what she found is that there’s two key ingredients to making a framework succeed at scale. One is pre-configuration, meaning that you come to work, you’re going to start a brand new app, you should be given something that is already ready to go and meets a lot of the production demands that are known at that given point in time.

Guillermo: On the other hand, the other really important step that we’re working towards is conformance. You can be given the most highly optimized production ready pre-configured framework, but if you go ahead and, for example, start introducing lots of heavy dependencies or third party scripts or use very inefficient layouts that take a long time to paint and so on and so forth, then you’re going to make that pre-configuration sort of go to waste. By mixing pre-configuration with conformance over time, the developer is not only having a great starting point, but it’s also guided to success over time.

Drew: It seems that a characteristic of Next.js, that it’s quite opinionated, the UI layer is React, it uses type script, uses Webpack, and those are all choices that the project has made and that’s what you get. Correct me if I’m wrong, but you couldn’t swap out React for Vue, for example, within Next.js.

Guillermo: That’s a good point, where technical decision making meets sort of an art. On one hand, I’d really like to claim that Next is very unopinionated, and the reason for this is that if you specifically go to github.com/vercel/nextjs and the examples directory, you’ll see that there’s almost like a combinatoric explosion of technologies that you can use together with Next.js. You’ll see fire-based, you’ll see Graphic UL, you’ll see Apollo, you’ll see Redux, you’ll see MobX, in the CSS space there’s even more options.

Guillermo: We have a default CSS port that’s embedded, but then you can use two flavors of it, one with import, one with style tags which we call Style JSX, which resembles very much like the web platform approach to Shadow CSS. The reason I mean unopinionated is we want Next.js to stay very close to the “bare metal” of the web, and not introduce lots of primitives that if the web from 10 years from today would be incompatible with. Then if you look at the examples, you’ll see that there’s all these other technologies that you can plug in.

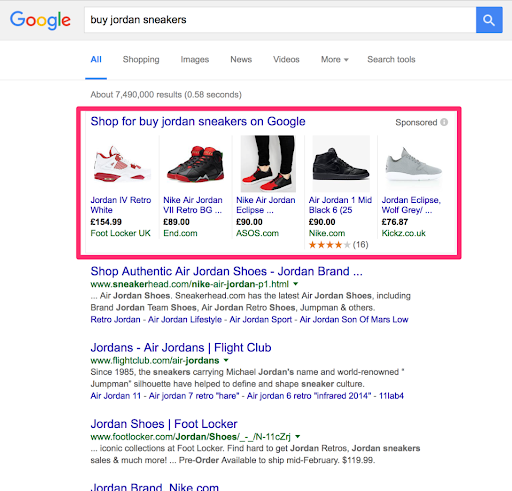

Guillermo: The base level of opinionation is that there is React and you’re not going to be able to replace it, at least anytime soon. Then there is the concept of you should be able to create pages, and this was kind of like a new thing when we first launched it, which was everyone is trying to create single-page applications. What we realized is like the internet is made up of websites with lots of pages that create distinct entry points via search engines, via Twitter, via Facebook, via social networks, via email companions, like you always guide the person toward an entry point, and that person that comes through that entry point shouldn’t have to download the burden of the entirety of the application.

Guillermo: Then that path led us to implementing server rendering, then static generation for multiple pages, et cetera, et cetera. That other base level of opinionation is Next should be a framework that works for the web, not against the web. Then on top of that, React was missing data fetching and routing primitives, and we added those. There’s a level of opinionation that has to deal with like everybody needs a router, so might as well have a router built in by default.

Drew: The big advantage of having those defaults is it takes away a lot of the complexity of choice, that it’s just there, it’s configured, and you can just start using it without needing to think too much, because I think we’ve all-

Guillermo: Exactly.

Drew: Been in situations where there are far too many choices of what components to use, and it can be overwhelming and get in the way of being productive.

Guillermo: Exactly.

Drew: What sort of projects do you see people using Next.js for? Is it for basically any situation where you might build a production React app, or is it more suited to particular types of content heavy sites? Does it matter in that sense?

Guillermo: Yeah, so this has been an age old debate of the web, is the web for apps, is the web for sites, is it a hybrid? What is the role of JavaScript, et cetera, et cetera? It’s kind of hard to give a straight up answer, but my take on this is the web was evolved always to be a hybrid of content that is evolving to be more and more dynamic and personal to the user. Even when you say like a content website, the high end content websites of the world have code bases that are very much comparable to apps.

Guillermo: A great example here is like New York Times, they’ll give you embedded widgets with data analysis tools and interactive animation, and they’ll recommend what story to read next, and they have a subscription model built in which sometimes gives you part of the content and sometimes counts how many articles you’ve read. Like if I told you this when the web was invented, like Tim Berners-Lee would be like, “No, that’s crazy, that’s not possible on the web,” but that’s the web we have today.

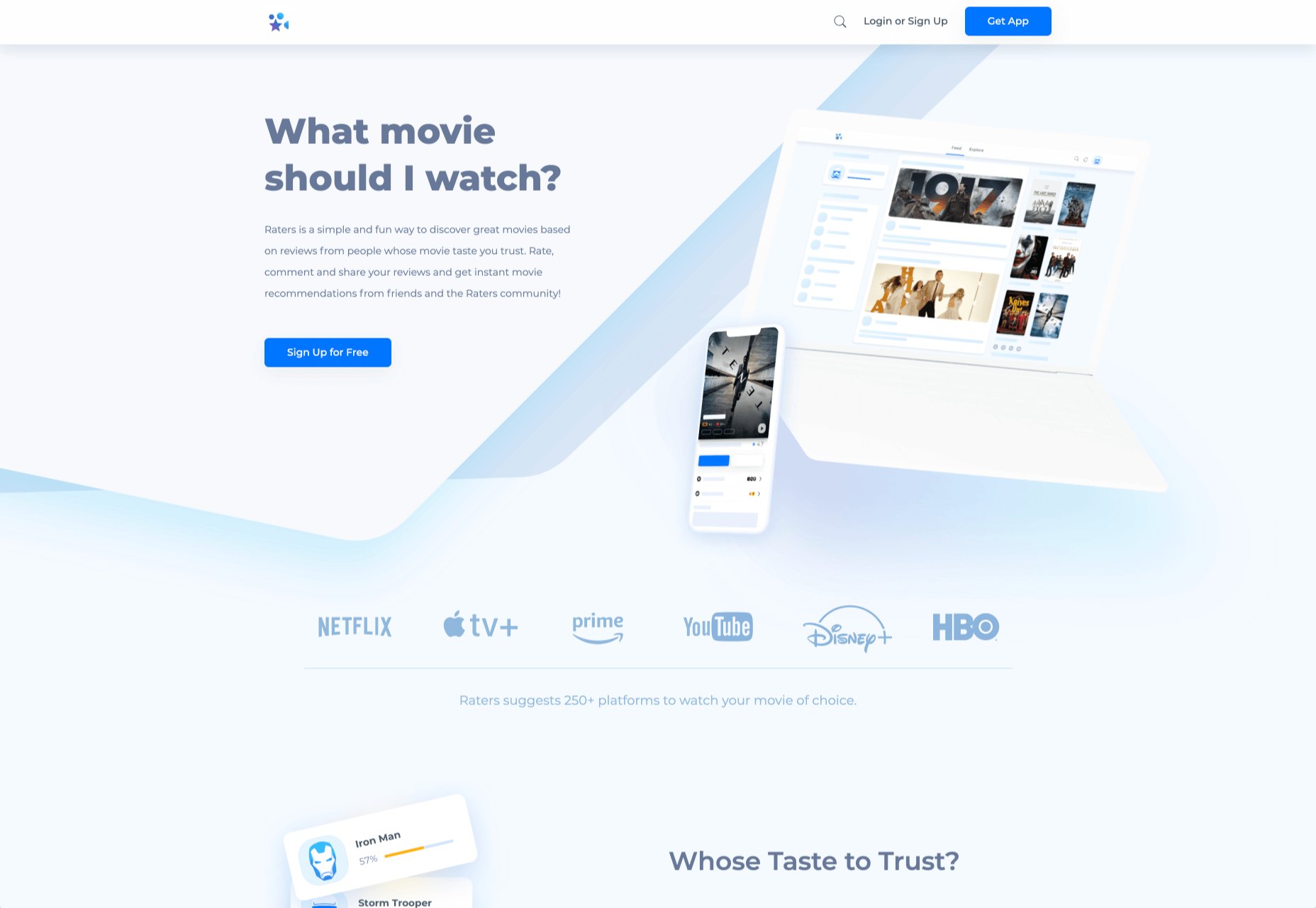

Guillermo: Next.js is answering a lot of these complex modern needs, which means you’ll see lots of e-commerce usage, you’ll see lots of content with that. E-commerce meaning, by the way, not just like buy items, but experiences like the largest real estate websites on the web, realtor.com, zillow.com, trulio.com, that entire category is all Next.js, then content sites. We just onboarded washingtonpost.com as a customer of Vercel and Next.js, we have then a third category that is more emergent but very interesting, which is full apps and user-generated content, like tiktok.com, and kind of like you would think the original single-page application use case as well being quite represented there.

Guillermo: Next.js sort of shines when you need to have lots of content that has to be served very, very quickly, has to be SEO optimized, and at the end of the day, it’s a mix of dynamic and static.

Drew: I’ve previously spoken to Marcy Sutton about Gatsby, which seems to be in a similar sort of space. It’s always great to see more than one solution to a problem and having choice for one project to the next. Would you say that Next.js and Gatsby are operating in the same sort of problem space, and how similar or dissimilar are they?

Guillermo: I think there’s an overlap for some use cases. For example, my personal blog rauchg.com runs on Next.js, it could’ve just been a great Gatsby blog as well. There is that overlap in the smaller static website sort of space, and by small I don’t mean not relevant. A lot of dotcoms that are super, super important run on basically static web, so that’s the beauty of Jamstack in my opinion. Because Next.js can statically optimize your pages and then you can get great Lighthouse scores through that, you can use it for overlapping use cases.

Guillermo: I think the line gets drawn when you start going into more dynamic needs and you have lots of pages, you have the need to update them at one time. Although Gatsby is creating solutions for those, Next.js already has production ready live solutions that work with any sort of database, any sort of data backend for basically “generating” or “printing” lots and lots of pages. That’s where today customers are going to Next.js instead of Gatsby.

Drew: One of the problems that everyone seems to run into as their JavaScript-based solution gets bigger is performance and how things can start getting pretty slow, you have big bundle sizes. Traditionally, things like code splitting can be fairly complex to get configured correctly. I see that’s one of the features that jumped out at me of Next.js, that it claims that the code splitting is automatic. What does Next.js do in terms of code splitting to make that work?

Guillermo: Your observation is 100% right. One of the biggest things with the web and one of the biggest weights on the web is JavaScript, and just like different materials have different densities and weights irrespective of the actual physical volume, JavaScript tends to be a very dense, heavy element. Even small amounts of JavaScript compared to, like for example, images that can be processed asynchronously and off the main thread, JavaScript tends to be particularly bothersome.

Guillermo: Next.js has invested a tremendous amount of effort into automatically optimizing your bundles. The first one that was my first intuition when I first came up with the idea for Next.js was you’re going to define, for example, 10 routes. In the Next.js world you create a pages directory and you drop your files in there Index.js, About.js, Settings.js, Dashboard.js, Termsofservice.js, Signup.js, Login.js. Those become entry points to your application that you can share through all kinds of media.

Guillermo: When you enter those, we want to give you JS that is relevant for that page first and foremost, and then perhaps a common bundle so that subsequent navigations within the system are very snappy. Next.js also, which is very, very nice, automatically pre-fetches the rest of the pages that are connected to that entry point, such that it feels like a single-page application. A lot of people say like, “Why not just use Create React app if I know that I have maybe a couple routes?” I always tell them, “Look, you can find those as pages, and because Next.js will automatically pre-fetch ones that are connected, you end up getting your single-page application, but it’s better optimized with regards to that initial paint, that initial entry point.”

Guillermo: That was the initial code splitting approach, but then it became a lot more sophisticated over time. Google contributed a very nice optimization called Module and No Module, which will give differential JS to modern browsers, and legacy JS that’s heavy with polyfields to other browsers, and this optimization 100% automated and produces massive savings. We gave it to one of our customers that we host on Vercel called Parnaby’s, I believe if I’m not mistaken, it was something very, very significant. It was maybe like 30% savings in code sizes, and that was just because they upgraded Next.js to a version that optimized JS bundles better.

Guillermo: That was kind of the point that we were going over earlier, which is you choose Next.js and it only gets better and more optimal over time, it’ll continue to optimize things on your behalf. Those are, again, pre-configurations that you would never have to deal with or be bothered with, and the research of which you don’t ever even want to do, to be honest. Like I wasn’t obviously very involved with this, but I look at some of the internal discussions and they were discussing all these polyfields that only mattered to Internet Explorer X and Soho, I was like, “I don’t even want to know, let’s just upgrade Next.js and get all these benefits.”

Drew: There is sometimes great benefits on there with sticking with the defaults and sticking with the most common configuration of things, which seems to be really the Next.js approach. I remember when I started writing PHP back in the early 2000s, and everybody was using PHP and MySQL, and at the time I’d just come from Windows so I wanted to use PHP and Microsoft Sequel Server. You can do it, but you’re swimming against the tide the whole way. Then as soon as I just switched over to MySQL, the whole ecosystem just started working for me and I didn’t need to think about it.

Guillermo: Yeah, everything just clicks, that is such a great observation. We see that all the time, like the Babel ecosystem is so powerful now that you could become, for example, a little bit faster by swapping Babel for something else, but then you trade off that incredible ecosystem compatibility. This is something you touched on performance earlier, and like for a lot of people, build performance and static generation performance is a big bottleneck, and this is something that we are very diligent in improving the performance of our tools incrementally.

Guillermo: For example, one of the things that Next.js is doing now is that it’s upgrading its default from Webpack 4 to Webpack 5, which has some breaking things, and that’s why we’re first offering it to people as an opt-in flag, but later on it’ll become the default. Webpack 5 makes incredible performance improvements, but we’re not sacrificing the Webpack ecosystem, we incrementally improved. Sure, there were some very small things that needed to be sacrificed, but that’s an incredible benefit of the JS ecosystem today that a lot of people are glossing over, I think, because maybe they see, “Oh, we could’ve done X in Soho, maybe it was a little faster, or maybe MPM in Soho would take less time.” They pick up some details and they miss the bigger picture, which is the ecosystem value is enormous.

Drew: The value of having all the configuration and the maintenance and that side of it done by a project like Next.js rather than taking that on yourself by swapping to using something else is incredible, because as soon as you move away from those defaults, you’re taking on the burden of keeping all the compatibilities going and doing it yourself. One of the things that I’ve been really interested in with Next.js is there are options available for either doing static site generation or server-side rendering, or maybe a hybrid of the two perhaps. I think there’s been some recent changes to this in a recent update, can you tell us a little bit about that and when you might choose one or the other?

Guillermo: Yeah, for sure. One of the key components of this hybrid approach combined with the page system that I described earlier is that you can have pages that are fully static or pages that server rendered. A page that’s fully static has the incredible benefit of what I call static hoisting, which is you can take that asset and automatically put it at the edge. By putting it at the edge, I mean you can cache it, you can preemptively cache it, you can replicate it, you can make it so that when a request comes in, it never touches the server because we know ahead of time, “Hey, Slash Index is a static.”

Guillermo: That’s a very, very interesting benefit when it comes down to serving global audiences. You basically get an automatic CDN out of the box, especially when you deploy the modern edge networks like Vercel or AWS Amplify or Netlify and so on. Next.js has this premise of if it can be made static, it should be static. When you’re first starting a project and you’re working on your first page or you’re kicking the tires of the framework, might as well make everything static.

Guillermo: Even for high end needs, so for example, vercel.com, our own usage of Next.js is fully static. It’s a combination of fully static and static site generation, so all our marketing pages are static, our blog is statically generated from a dynamic data source, and then our dashboard which has lots of dynamic data, but we can deliver it as shells or skeletons, all the pages associated with viewing your deployments, viewing your projects, viewing your logs, et cetera, et cetera, are all basically static pages with client-side JavaScript.

Guillermo: That serves us incredibly well because everything where we need a very fast first-pane performance is already pre-rendered, everything that needs SEO, already pre-rendered, and everything that’s extremely dynamic, we only have to worry about security, for example, from the perspective of the client side which uses the same API calls that, for example, our CLI used or our integrations use, et cetera, et cetera. A fully static website, very cheap to operate, incredibly scalable and so on and so forth.

Guillermo: Now, one particular thing that we needed with our blog was we wanted to update the data very quickly. We wanted to fix a typo very quickly and not wait for an entire build to happen, and this is a very significant benefit of Next.js, that as you straddle from a static to a dynamic, it gives you these in between solutions as well. For our blog we used incremental static generation, so essentially we can rebuild one page at a time when the underlying content changes.

Guillermo: Imagine that we had not just a couple hundred blog posts and we had lots of blog posts being generated all the time and being updated all the time, like I mentioned one of our customers, Washington Post, in that case you need to go more toward full SSR, especially as you start customizing the content for each user. That journey of complexity that I just described started from I have one marketing page, to I have a blog that has a couple thousand pages, to I have tens of thousands or millions of pages. That’s the Next.js journey that you can traverse with your own business.

Guillermo: Then you start as a developer to choose between perhaps less responsibility to more responsibility, because when you opt in to using SSR, you’re now executing code on the server, you’re executing code on the cloud, there’s more responsibility with more power. The fact that you can decide where you use each kind of tool is I think a very, very interesting benefit of Next.

Drew: Just in practicalities of combining the static site generation and the server-side rendering, how does that work in terms of the server element? Are you needing a dedicated platform like Vercel to be able to achieve that, or is that something that can be done more straightforwardly and more simply?

Guillermo: Next.js gives you a dev server, so you download Next and you run your Next Dev, and that’s the dev server. The dev server is obviously incredibly optimized for development, like it has the latest fast refresh technology that Facebook released, where … Actually, Facebook didn’t release it, Facebook uses it internally to get the best and most performant and most reliable hot module replacement, such that you’re basically typing and it changes are reflecting on the screen, so that’s the dev server.

Guillermo: Then Next gives you a production server called Next Start, and Next Start has all the capabilities of the framework for self-hosting. The interesting thing about Vercel is that when you deploy Next to it, it gets automatically optimized and it’s 100% serverless, meaning there’s no responsibility whatsoever of administration, scaling, cashing and cashing validation, purging, replication, global fail over and so on and so forth that you would have to take on when you run Next Start yourself.

Guillermo: That’s also the great benefit of Next.js, so for example, apple.com has several different properties, subdomains and pages on dotcom on Next.js they self-host, due to very, very advanced and stringent security and privacy needs. On the other hand, washingtonpost.com uses Vercel, so we have this sort of wide range of users, and we’re extremely happy to support all of them. The nice thing about where serverless is headed in my opinion is it can give you best of both worlds in terms of the most optimal performance that only gets better over time, with the best developer experience of like, “Hey, I don’t have to worry about any sort of infrastructure.”

Drew: The Next.js is an open source project that’s being developed by the team at Vercel. Are there other contributors outside of Vercel?

Guillermo: Yeah, so Google Chrome being the main one that actively submit server PRs, help us with optimizations and testing it with partners, like very large Next.js users that are already part of the Google ecosystem, for example, due to using lots and lots of apps, so they need to be involved closely as partners. Facebook, we maintain a great relationship with the Facebook team. For example, fast refresh, we were the first React framework to land that, and they helped guide us through all the things that they learned of using React and fast refresh at Facebook.

Guillermo: We work with lots of partners that have very large deployments of Next.js apps in the wild from all kinds of different sort of use cases, like imagine e-commerce and content. Then there’s just lots and lots of independent contributors, people that use Next.js personally, but also educators and members of front infrastructure teams at large companies. It’s a very, very wide community effort.

Drew: It sounds like the concern that somebody might have, that this is being developed in a significant part by Vercel, that they might have the concern that they’re going to get sort of locked into deploying on that particular platform, but it sounds very much like that’s not the case at all, and they could develop a site and deploy it on Firebase or Netlify or…

Guillermo: Yeah, absolutely. I like to compare it a lot for like the Kubernetes of the Front End age in a way, because at the end of the day I am a firm believer that … Kubernetes is something that pretty much almost everyone needs when they need to run Linux processes, like you were talking about opinionation and you’re saying it’s a good technology, it’s very much not opinionated, but there is some opinionation that we kind of forget about. It’s like at the end of the day, it grew out of running a specific demons of Linux programs packaged as containers.

Guillermo: Next is in a similar position, because what we take for being the universal primitive of the world as a React component, obviously it’s opinionated, but we do think that for lots of enterprises, just like they all gravitate towards Linux, we are seeing the same thing towards React and Vue, but Vue luckily has Nuxt too, which is a very awesome solution, it’s equivalent in ideation and principles as Next. We’re gravitating towards these platforms like Next.js, like Nuxt, like Sapper for the Svelte ecosystem.

Guillermo: I think these should be open platforms, because again, if everybody’s going to need this, might as well not reinvent the wheel across the entire industry, right? We very much welcome that position, we welcome people to deploy it and reconfigure it and rebuild it and redistribute it and so on.

Drew: Just recently a new version of Next.js was released, I think version 9.5. What significant changes were there in that release?

Guillermo: The most awesome one is, as I was saying earlier, a lot of things start static and then become more dynamic as things grow. This was the venture for WordPress, by the way. WordPress in the beginning was based on a static file database approach, and then grew into needing a database, kind of like what you described with how PHP evolved to be more and more MySQL. What’s nice about Next.js 9.5 is that it makes incremental static generation a production ready feature, so we took it out of the unstable flag.

Guillermo: This feature allows you to make that journey from static to dynamic without giving up on all the static benefits, and without having to go full for server-rendered dynamic, so it stretches the useful lifetime of your sort of static. The way we use it at Vercel, for example, as I mentioned, it’s like our blog gets fully pre-rendered at build time, but then for example, we’re actually in a couple minutes about to make a major announcement, and when we blog about it we want to be able to tweak it, fix it, preview it, et cetera without having to issue a five to 10-minute build every time we change one letter of one blog post.

Guillermo: With incremental static generation, you can rebuild one page at a time. What could take minutes or even seconds, depending on how big your site is, now takes milliseconds. Again, you didn’t have to give up on any of the benefits of static. That’s perhaps the thing I’m most excited about that went stable on Next.js 9.5, and specifically because the JS community and the React community and the framework community and static site generated community have been talking about this unicorn of making a static incremental for a long time, so the fact that Next.js did it, it’s being used in production and it’s there for everybody to use, I think it’s a major, major, major milestone.

Guillermo: There’s lots of little DX benefits. One that’s really nice in my opinion is Next.js, as I said, has a page system. You would argue, “Okay, so let’s say that I’m uber.com and I’ve decided to migrate on Next.js, do I need to migrate every URL inside over to Next.js in order to go live?” This has become a pretty important concern for us, because lots of people choose Next.js, but they already are running big things, so how do you reconcile the two?

Guillermo: One of the things that Next.js allows you to do in 9.5 is you can say, “I want to handle all new pages that I created with Next.js with Next.js, and the rest I want to hand off to a legacy system.” That allows you incremental, incremental is the keyword here today, incremental adoption of Next.js. You can sort of begin to strangle your legacy application with your Next.js optimized application one page at a time, when you deploy and you introduce in your Next.js page, it gets handled by Next. If it doesn’t match the Next.js routing system, it goes to the legacy system.

Drew: That sounds incredibly powerful, and the incremental rendering piece of that, I can think of several projects immediately that would really benefit that have maybe 30-minute build times for fixing a typo, as you say. That sort of technology is a big change.

Guillermo: We talked to one of the largest, I believe, use cases in Jamstack in the wild, and it was basically a documentation website and their build times were 40 minutes. We’re doing a lot in this space, by the way, like we’re making pre-rendering a lot faster as well. One of my intuitions for years to come is that as platforms get better, as the primitives get better, as the build pipelines get better we’re going to continue to extend the useful lifetime of statics. Like what ended up taking 40 minutes is going to take four.

Guillermo: A great example is we’re rolling out an incremental build cache, as well, system. I sort of pre-announced it on Twitter the other day, we’re already seeing 5.5 times faster incremental builds. One of the things that I like about Jamstack is that the core tenet is pre-render as much as possible. I do think that’s extremely valuable, because when you’re pre-rendering you’re not rendering just in time at runtime. Like what otherwise the visitor would incur in in terms of rendering costs on the server gets transferred to build time.

Guillermo: One of the most exciting things that’s coming to Next is that without you doing anything as well, the build process is also getting faster. On the Vercel side, we’re also taking advantage of some new cloud technology to make pre-rendering a lot faster as well. I think we’re always going to live in this hybrid world, but as technology gets better, build times will get better, pre-rendering will get better and faster, and then you’ll have more and more opportunities to do kind of a mix of the two.

Drew: Sounds like there’s some really exciting things coming in the future for Next.js. Is there anything else we should know before we sort of go away and get started working with Next.js?

Guillermo: Yeah. I think for a lot of people for whom this is new, you can go to nextjs.org/learn, it’ll walk you through building your first small static site with Next.js, and then it’ll walk you through the journey of adding more and more complexity over time, so it’s a really fun tutorial. I recommend also staying tuned for our announcement that I was just starting to share on twitter.com/vercel, where we share a lot of Next.js news. Specifically we highlight a lot of the work that’s being done on our open source projects and our community projects and so on. For myself as well, twitter.com/rauchg if you want to stay on top of our thoughts on the ecosystem.

Drew: I’ve been learning all about Next.js today, what have you been learning about lately, Guillermo?

Guillermo: As a random tangent that I’ve been learning about, I decided to study more economics, so I’ve been really concerned with like what is the next big thing that’s coming in terms of enabling people at scale to live better lives. I think we’re going through a transition period, especially in the US, of noticing that a lot of the institutions that people were “banking on”, like the education system, like the healthcare system, a lot of those, like where you live and whether you’re going to own a house or rent and things like that, a lot of these things are changing, they have changed rapidly, and people have lost their compass.

Guillermo: Things like, “Oh, should I go to college? Should I get a student loan?” and things like that, and there is a case to be made for capitalism 3.0, and there is a case to be made for next level of evolution in social and economic systems. I’ve been just trying to expand my horizons in learning a lot more about what could be next, no pun intended. I’ve found there’s lots of great materials and lots of great books. A lot of people have been thinking about this problem, and there is lots of interesting solutions in the making.

Drew: That’s fascinating. If you, dear listener, would like to hear more from Guillermo, you can find him on Twitter at @RauchG, and you can find more about Next.js and keep up to date with everything that goes on in that space at nextjs.org. Thanks for joining us today, Guillermo. Do you have any parting words?

Guillermo: No, thank you for having me.